This month I’ve been working on a real time implementation of the Radio Autoencoder (RADAE), suitable for Push To Talk (PTT) use over the air.

One big step was refactoring the core Machine Learning (ML) encoder and decoder to a “stateful” design that can be run on short (120ms) sequences of data, preserving state each time it is called. The result is a set of command line utilities that can work with streaming audio from a headset or radio. This example demonstrates the full receiver stack: the rx.f32 file (off-air float IQ samples) is decoded to audio samples that are played through your speakers:

cat rx.f32 | python3 radae_rx.py model17/checkpoints/checkpoint_epoch_100.pth -v 1 | ./build/src/lpcnet_demo -fargan-synthesis - - | aplay -f S16_LE -r 16000

I spent some time profiling, and with a little optimisation we now have a RADAE Tx and Rx that achieve real time encoding and decoding on desktop and laptop PCs. Quite surprising given it’s still Python code (with the heavy lifting performed in PyTorch and NumPy). With a little more work, we could use these streaming utilities to build a network based RADAE server, a sound card plug in, or a “headless” RADAE system like the ezDV/SM1000.

Our end goal for a RADAE implementation is a C callable library. While low technical risk, a C port is time consuming, and would delay testing the big unknowns in a new speech communication system such as RADAE. There is also the risk of significant rework of the C code if (when) there are any problems with the waveform. So our priority is to test the RADAE waveform against our requirements, and fortunately the Python version is fast enough for that already.

Over the years we’ve discovered many ways to break digital voice systems. These issues are much easier to fix in simulation so I’ve developed many intricate automated tests, for example tests that simulate slowly varying, stationary channels, and other tests that simulate fast fading like the northern European winter. Do carriers (sine waves) in the middle of a RADAE signal cause it to fall over or make it sync by accident? What happens if the Tx and Rx stations have slightly different sample clock frequencies? I won’t bore you with the details here, but a lot of work goes into this stuff.

While giving RADAE a hard time in simulation I tried the multipath disturbed (MPD) channel. This has 2 Hz fading and 4ms delay spread, and is encountered in winter at high latitudes (e.g. NVIS communications during the UK winter). It’s tough on HF modems. The mission here is “do not fall over with fast fading” – it’s OK if a few more dB of SNR is required. Here is a sample of what the off air received signal sounds like at 3dB SNR, followed by the decoded audio.

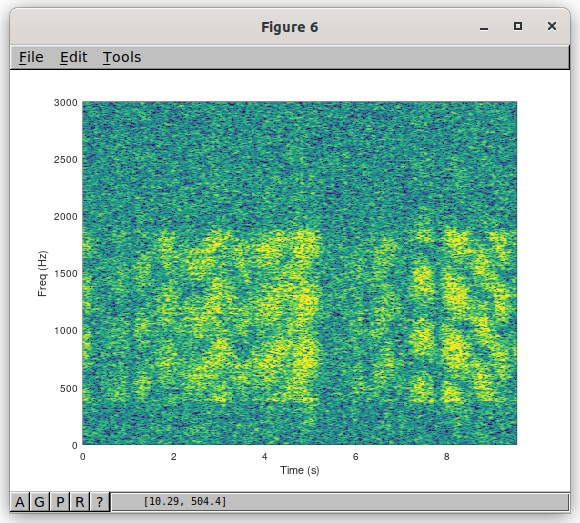

Despite the received signal dipping into the noise at times, RADAE seems to handle it OK. I designed the DSP equalization to handle fast fading, but only trained the ML network with a simulation of 1 Hz fading. So I was concerned the ML might fall over but this time we got lucky! Here is the spectrogram of the same signal – at times the fading completely wipes it out.
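For a feel of what a 4ms delay spread does to the spectrum, here is a small Python sketch of a static two-path channel. This is a simplification, not the fading simulator used for the tests above: it assumes two equal-gain paths separated by exactly 4ms, and the `two_path_gain` helper is hypothetical. Under that assumption the frequency response is H(f) = g1 + g2·exp(−j2πfτ), which has a null every 1/τ = 250 Hz. In the real fading channel the path gains change over time, so the notches come and go rather than staying fixed.

```python
import cmath
import math

TAU = 0.004  # seconds between the two paths (4 ms, from the MPD description)


def two_path_gain(freq_hz, g1=1.0, g2=1.0, tau=TAU):
    """Magnitude of H(f) = g1 + g2*exp(-j*2*pi*f*tau) for a static two-path channel."""
    return abs(g1 + g2 * cmath.exp(-2j * math.pi * freq_hz * tau))


notch_spacing_hz = 1.0 / TAU          # distance between adjacent nulls
gain_at_null = two_path_gain(125.0)   # paths arrive in anti-phase and cancel
gain_at_peak = two_path_gain(250.0)   # paths arrive in phase and add
```

With equal gains the null is total, so any carrier that lands on one is wiped out until the channel changes.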

One innovation is an “End of Over” system. When a transmission ends, an “end of over” frame is sent and the Rx cleanly “squelches” the receive audio. Previous FreeDV modes would run on for a few seconds making R2D2 sounds, as from the receiver’s perspective it’s hard to know if the transmitter has finished or you are just in a fade.
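The logic can be pictured with a few lines of Python. This is only a sketch of the idea, not the RADAE receiver code: the `squelch_gate` function and the `"audio"`/`"eoo"` frame labels are hypothetical names chosen for illustration.

```python
def squelch_gate(frames):
    """Pass decoded audio frames until an end-of-over marker arrives.

    `frames` is an iterable of (kind, payload) tuples, where kind is the
    hypothetical label "audio" or "eoo". Returns the audio payloads played.
    """
    played = []
    for kind, payload in frames:
        if kind == "eoo":
            break  # transmitter signalled the end: mute immediately
        played.append(payload)
    return played


# Two good frames, the end-of-over marker, then whatever the decoder
# would have made of the noise that follows.
over = [("audio", "a0"), ("audio", "a1"), ("eoo", None), ("audio", "noise")]
played = squelch_gate(over)
```

Because the decision rests on an explicit marker rather than on signal level, a deep fade in the middle of an over does not look like the end of the transmission.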

On another topic this month I also set up a new WordPress host for this site, and spruced up the content a little. I’m more at home with DSP than SPF and MX records but with the kind support from VentraIP I got there eventually. Thanks Bruce Perens for hosting this site for the last few years.

If you are interested in helping out with the RADAE work I have been building up a list of small chunks of work that need doing using the GitHub Issues system. Many of them require general GitHub/C coding/Linux skills, and not hard core DSP or ML. I’ve listed the skills required in each Issue. Please (please!) discuss them with me first (using the Issue comment system) before kicking off your own PR – I have a really good idea what needs to be done and we need to stay focused.

I have written a test plan for the next phase of over the air (OTA) RADAE testing. The goals will be (a) crowd sourced testing of the latest PAPR-optimised waveform over a variety of channels using the stored file system, and (b) testing real time PTT conversations over real radio channels using RADAE. This will build our experience and no doubt uncover bugs that will require some rework. I’m on track to start this test campaign in August.

Hi David, The first sample, was just noise, no voice. However, the second sample, was just FANTASTIC, with no noise, all voice!!! 73, Trippy, AC8S

Hi Trippy – the first sample was the off air RADAE signal, not SSB. So you wouldn’t expect to hear voice. The idea was to show the effect of the fast fading multipath disturbed channel on the RADAE signal.

You might consider a Rust port rather than a C one, it’s callable from C and prevents an entire host of errors that are all too easy to code in C. Rust code is re-entrant (or it won’t compile), protects against most sources of memory leaks, and understands aliasing better than C. There is a learning curve, it took me a few days, and embedded Rust is still immature enough that my ESP-32 systems code will all be C while things like CODECs and modems can easily be Rust. It is also possible to compile Rust to WebAssembly, and running that in the browser or Node is going to be at least as fast as Python, and portable to almost anything.

Hi Bruce – TBH I’d be happy to keep 95% of it in Python/NumPy/PyTorch, if we can get that to build across all platforms. With perhaps just the core ML in Rust or C. The core ML is just a bunch of matrix multiplies (just big matrices). It’s just so much easier to develop in a domain specific language, and this is “experimental radio” where we need an efficient way to forge ahead. Given we need a 1GHz multicore CPU (e.g. a Pi or Android device) it is likely we’ll have an operating system and mainstream distro anyway.

As usual I am over my head on the math, so excuse me if I blabber. But we are seeing a lot of embedded tensor processors, and if I understand that correctly, that is a higher order form of matrices. So this may not have to run on desktop forever.

I don’t know if you start in frequency domain. Karn has had a lot of success with a new wrinkle on fast convolution. https://www.iro.umontreal.ca/~mignotte/IFT3205/Documents/TipsAndTricks/MultibandFilterbank.pdf